OpenAI’s GDPval Test Examined 44 Key Human Jobs — See How Close AI Models Came to Expert-Level Work

OpenAI has unveiled a new way to measure artificial intelligence against real-world work: GDPval, a benchmark designed to test models on tasks across 44 occupations in the industries that most power the global economy.

The results suggest that leading models like GPT-5 and Anthropic’s Claude Opus 4.1 are edging closer to human-level performance in specialized jobs — though experts stress this doesn’t mean AI is ready to replace professionals.

The benchmark, announced September 25 in a research post by OpenAI, shifts the conversation away from academic quizzes and coding puzzles to focus instead on the kind of deliverables knowledge workers create every day: legal briefs, nursing care plans, financial reports, engineering designs, even slide decks.

A Benchmark Built on Economic Value

OpenAI says it designed GDPval by looking directly at the industries that contribute most to GDP and the occupations within them that account for the bulk of wages. The final dataset spans nine sectors — including healthcare, finance, law, manufacturing, retail, media, government, real estate, and wholesale trade — and covers 44 knowledge work roles from nurses and financial analysts to editors, lawyers, and software developers.

Unlike traditional benchmarks that use multiple-choice questions or synthetic prompts, GDPval tasks were created by experienced professionals with an average of 14 years in their fields. Each task mirrors actual work products, such as a compliance officer drafting a regulatory memo, or a manufacturing engineer preparing a 3D design presentation. In total, GDPval includes 1,320 tasks, with a 220-task “gold set” released publicly for researchers.

The realism is deliberate. As OpenAI puts it: “GDPval tasks are not simple text prompts… the expected deliverables span documents, slides, diagrams, spreadsheets, and multimedia.”

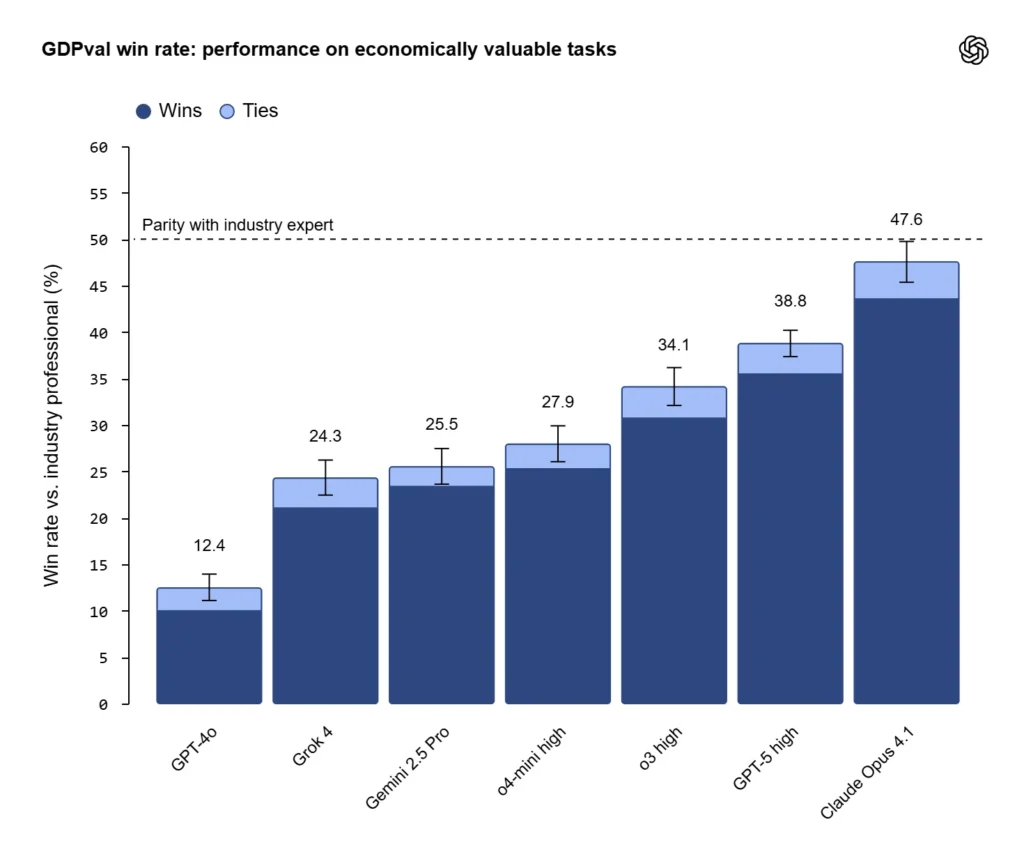

How Models Performed

To evaluate the benchmark, OpenAI tested its own models — GPT-4o, o3, and GPT-5 — alongside Anthropic’s Claude Opus 4.1, Google’s Gemini 2.5 Pro, and xAI’s Grok 4. The outputs were then compared against human-generated work in blind reviews by industry experts.

The findings:

- Claude Opus 4.1 achieved a combined win+tie rate of 47.55%, excelling in aesthetics like formatting and presentation layout.

- GPT-5 High scored 35.48% wins plus 3.28% ties, for a combined 38.76%, with particular strength in accuracy and domain knowledge.

- Other models, including Gemini and Grok, lagged behind but still showed progress.

As India Today reported, OpenAI defines “parity” as reaching or exceeding 50% in these evaluations. That means Claude is the closest yet — not fully there, but within striking distance.
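The parity arithmetic can be made concrete with a minimal Python sketch. It assumes each blind comparison receives exactly one grade (a win, tie, or loss for the model) and treats the 50% threshold described above as the parity line. The class and function names are hypothetical and are not part of any GDPval tooling. Only GPT-5 High's win/tie split is plugged in, because the article gives Claude's result only as a combined figure.

```python
from dataclasses import dataclass

# Parity line as described in the article: win+tie rate of at least 50%.
PARITY_THRESHOLD = 50.0  # percent


@dataclass
class PairwiseResult:
    """Hypothetical summary of one model's blind comparisons, in percent."""
    win_pct: float
    tie_pct: float

    @property
    def win_or_tie_pct(self) -> float:
        # Share of comparisons where the model's deliverable was judged
        # better than, or as good as, the expert's.
        return self.win_pct + self.tie_pct

    def reaches_parity(self) -> bool:
        return self.win_or_tie_pct >= PARITY_THRESHOLD


def rate_from_grades(grades: list[str]) -> PairwiseResult:
    """Convert raw per-comparison grades ('win', 'tie', 'loss') into percentages."""
    if not grades:
        raise ValueError("need at least one graded comparison")
    n = len(grades)
    return PairwiseResult(
        win_pct=100.0 * grades.count("win") / n,
        tie_pct=100.0 * grades.count("tie") / n,
    )


# GPT-5 High's reported split from the article.
gpt5_high = PairwiseResult(win_pct=35.48, tie_pct=3.28)
print(round(gpt5_high.win_or_tie_pct, 2))  # 38.76
print(gpt5_high.reaches_parity())          # False
```

Counting ties in full toward the headline number matches the combined win+tie rate the article reports; a scheme that gave ties only half credit would produce lower figures.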

Tripling Performance in Just Over a Year

One of the most striking findings is the speed of progress. From GPT-4o, released in May 2024, to GPT-5, released in August 2025, model performance on GDPval tasks more than tripled.

OpenAI researchers noted that increasing model size, encouraging more reasoning steps, and giving richer task context all boosted performance. An internal experimental variant of GPT-5 pushed results even higher, suggesting further gains are achievable.

OpenAI also draws a comparison with earlier technology waves: the internet and smartphones each took more than a decade to move from invention to mass adoption. Rather than guessing how AI's trajectory will unfold, the company argues, GDPval offers a way to track it with data.

Where AI Shines — and Where It Falls Short

The benchmark shows that AI is already strong at structured, well-defined tasks: formatting reports, generating accurate summaries, and producing draft designs or financial models. In these areas, models can be roughly 100 times faster and cheaper than human professionals, though that estimate counts only model inference time and API costs.
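The speed and cost comparison comes down to two ratios. The Python sketch below uses invented numbers (a five-hour task billed at $100 an hour versus three minutes and $5 of API fees), not GDPval data, and its names are hypothetical. Following the article's framing, the model side counts only inference time and API charges.

```python
from dataclasses import dataclass


@dataclass
class TaskCost:
    """Hypothetical time and direct cost to produce one deliverable."""
    minutes: float  # wall-clock time spent producing the deliverable
    dollars: float  # expert wages, or API fees for a model


def speed_and_cost_ratios(expert: TaskCost, model: TaskCost) -> tuple[float, float]:
    """Return (speedup, cost ratio) of the model relative to the expert.

    Only the inputs passed in are compared; anything beyond inference time
    and API charges on the model side is not counted.
    """
    if model.minutes <= 0 or model.dollars <= 0:
        raise ValueError("model time and cost must be positive")
    return expert.minutes / model.minutes, expert.dollars / model.dollars


# Invented figures for illustration only; these are NOT GDPval measurements.
expert = TaskCost(minutes=5 * 60, dollars=5 * 100.0)  # 5 hours at $100/hour
model = TaskCost(minutes=3.0, dollars=5.0)            # 3 minutes, $5 in API fees

speedup, cost_ratio = speed_and_cost_ratios(expert, model)
print(f"{speedup:.0f}x faster, {cost_ratio:.0f}x cheaper")  # 100x faster, 100x cheaper
```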

But limitations are just as clear. GDPval tasks are one-shot evaluations, meaning the models don’t iterate through multiple drafts or negotiate ambiguity — both of which are routine in professional settings. Writing a legal brief often requires client conversations; analyzing financial data requires refining assumptions after anomalies appear. Those workflows aren’t yet captured.

As OpenAI cautions: “Most jobs are more than just a collection of tasks that can be written down.”

The 44 Jobs on the Line

The occupations chosen for GDPval reflect where AI might have the biggest economic impact. They include:

- Healthcare: registered nurses, nurse practitioners, medical managers.

- Finance: analysts, managers, personal advisors.

- Law & Government: lawyers, compliance officers, social workers.

- Manufacturing: mechanical and industrial engineers, production supervisors.

- Information & Media: reporters, editors, producers, video editors.

- Retail & Real Estate: pharmacists, brokers, sales supervisors.

By spanning industries, GDPval paints a picture of how AI might enter the workplace — not as a wholesale replacement, but as an assistant handling routine tasks across many professions.

Implications for Work and the Economy

The results highlight a pivotal moment: AI models are no longer just answering trivia or fixing code bugs. They’re generating outputs that resemble professional deliverables across 44 fields.

That doesn’t mean job loss is immediate. Instead, experts suggest the near-term effect will be AI handling routine, repetitive tasks, leaving humans to focus on judgment, creativity, and interpersonal work. If adoption unfolds this way, the productivity boost could be significant.

OpenAI frames its mission around this: keeping everyone on the “up elevator” of AI by democratizing access and supporting workers through change.

What Comes Next

OpenAI plans to expand GDPval with more occupations, interactive workflows, and tasks that involve ambiguity and iteration. The long-term goal: measure not just if models can produce a single deliverable, but whether they can function effectively as collaborators across complex projects.

For now, the results signal two things clearly: progress is accelerating faster than many expected, and the debate over AI’s role in knowledge work is moving from the hypothetical to the practical.