Microsoft Azure Unveils the World’s Most Powerful AI Supercomputer and Its Global Network

Seattle, Sept. 20, 2025 — Microsoft has revealed what it calls the world’s most powerful AI supercomputer, housed inside its new Fairwater datacenter in Wisconsin, while simultaneously outlining plans to interconnect identical facilities across the globe.

The move signals a new era for Azure, positioning Microsoft not only as a cloud provider but as the operator of a global AI factory network designed to train and run frontier-scale models.

The Fairwater Datacenter: A New Benchmark in AI Infrastructure

The flagship Fairwater campus covers 315 acres in Mount Pleasant, Wisconsin. It includes three buildings totaling 1.2 million square feet, and its construction required 26.5 million pounds of structural steel, 120 miles of underground cable, and nearly 73 miles of mechanical piping. Microsoft executives described it as the most advanced AI facility ever constructed, designed from the ground up to train trillion-parameter models such as GPT-style large language models and multimodal AI systems.

Unlike traditional cloud datacenters, which are optimized to host many independent workloads (websites, apps, databases), Fairwater is designed to operate as one massive supercomputer. Its networking fabric links hundreds of thousands of NVIDIA GPUs into a single computational domain, which Microsoft says will deliver 10X the performance of today’s fastest standalone supercomputer.

Global Expansion: Norway and the UK Join the Network

Fairwater is not a one-off experiment. Microsoft confirmed that identical Fairwater sites are already under construction in other parts of the U.S., and it announced new hyperscale AI datacenter projects in Narvik, Norway, and Loughton, UK.

- UK Project: In partnership with Nscale, Microsoft will build the largest supercomputer in the UK. This facility will support UK-based workloads and is expected to become a cornerstone of the recently announced UK-US Technology Partnership.

- Norway Project: Working with Nscale and Aker JV, Microsoft will build a hyperscale AI datacenter in Narvik, drawing on the region’s renewable energy.

Together, these datacenters will form a distributed supercomputing system in which a single training job can span multiple facilities linked through Microsoft’s AI WAN (Wide Area Network).

Hardware: NVIDIA’s Blackwell at Scale

At the heart of Fairwater’s performance are clusters of NVIDIA GB200 servers, which Microsoft says it was the first to bring online at full datacenter scale.

- Per-Rack Specs: Each rack contains 72 NVIDIA Blackwell GPUs tied together in a single NVLink domain, with 1.8 terabytes per second of GPU-to-GPU bandwidth per GPU and access to 14 terabytes of pooled memory per rack.

- Throughput: Microsoft says one rack can process 865,000 tokens per second, the highest throughput of any cloud platform available today.

- Scalability: Thousands of racks are interconnected via InfiniBand and Ethernet fabrics at 800 Gbps, using a non-blocking “fat tree” topology to ensure every GPU can communicate at full line rate without congestion.
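
"Non-blocking" has a precise meaning here: each switch has as much uplink capacity as host-facing capacity, so in principle any half of the endpoints (for example, GPU network ports) can send to the other half at full line rate. The sketch below sizes the textbook three-tier k-ary fat tree built from identical k-port switches. It is an illustrative assumption, not Microsoft’s published Fairwater topology: the names `FatTree` and `oversubscription` and the 64-port switch size are hypothetical, and only the 800 Gbps line rate comes from the article.

```python
from dataclasses import dataclass


def oversubscription(host_ports: int, uplink_ports: int) -> float:
    """Host-facing vs. uplink capacity on one switch, assuming equal line rates.

    A ratio of 1.0 (or lower) means traffic from attached hosts never has to
    wait for uplink capacity, i.e. the switch is non-blocking.
    """
    return host_ports / uplink_ports


@dataclass(frozen=True)
class FatTree:
    """Textbook three-tier k-ary fat tree built from identical k-port switches.

    Illustrative model only (an assumption); not Microsoft's Fairwater design.
    """

    k: int                    # ports per switch; must be even
    link_gbps: float = 800.0  # per-port line rate (the 800 Gbps cited above)

    def __post_init__(self) -> None:
        if self.k < 2 or self.k % 2 != 0:
            raise ValueError("k must be an even integer >= 2")

    @property
    def hosts(self) -> int:
        # k pods x (k/2 edge switches per pod) x (k/2 hosts per edge switch)
        return self.k ** 3 // 4

    @property
    def switches(self) -> int:
        # k pods x (k/2 edge + k/2 aggregation switches), plus (k/2)^2 core switches
        return self.k * self.k + (self.k // 2) ** 2

    @property
    def edge_oversubscription(self) -> float:
        # Each edge switch splits its ports evenly: k/2 down to hosts, k/2 up.
        return oversubscription(self.k // 2, self.k // 2)

    @property
    def bisection_gbps(self) -> float:
        # Full bisection bandwidth: half the hosts can send to the other half
        # at line rate simultaneously.
        return self.hosts / 2 * self.link_gbps


if __name__ == "__main__":
    tree = FatTree(k=64)  # hypothetical 64-port switches
    print(tree.hosts)                  # 65536
    print(tree.switches)               # 5120
    print(tree.edge_oversubscription)  # 1.0
    print(tree.bisection_gbps)         # 26214400.0 (Gbps)
    print(oversubscription(48, 16))    # 3.0, a 3:1 oversubscribed (blocking) switch
```

With the hypothetical 64-port switches, a three-tier tree of this kind tops out at 65,536 endpoints; larger clusters need higher-radix switches or additional tiers.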

Upcoming sites in Norway and the UK will use NVIDIA’s next-generation GB300 chips, which offer even larger pooled memory and better energy efficiency.

Engineering at Supercomputer Scale

Building a supercomputer that spans multiple football fields creates unique engineering challenges. Microsoft addressed this in several ways:

- Two-Story Rack Layout: Rather than networking racks only side by side on a single floor, Fairwater uses a two-story layout that also connects racks vertically, reducing physical distance and cutting network latency.

- Exabyte-Scale Storage: The Wisconsin facility’s storage infrastructure alone spans the length of five football fields and can handle millions of read/write transactions per second. Azure’s reengineered Blob Storage is designed to feed vast AI training datasets to the GPUs without storage bottlenecks.

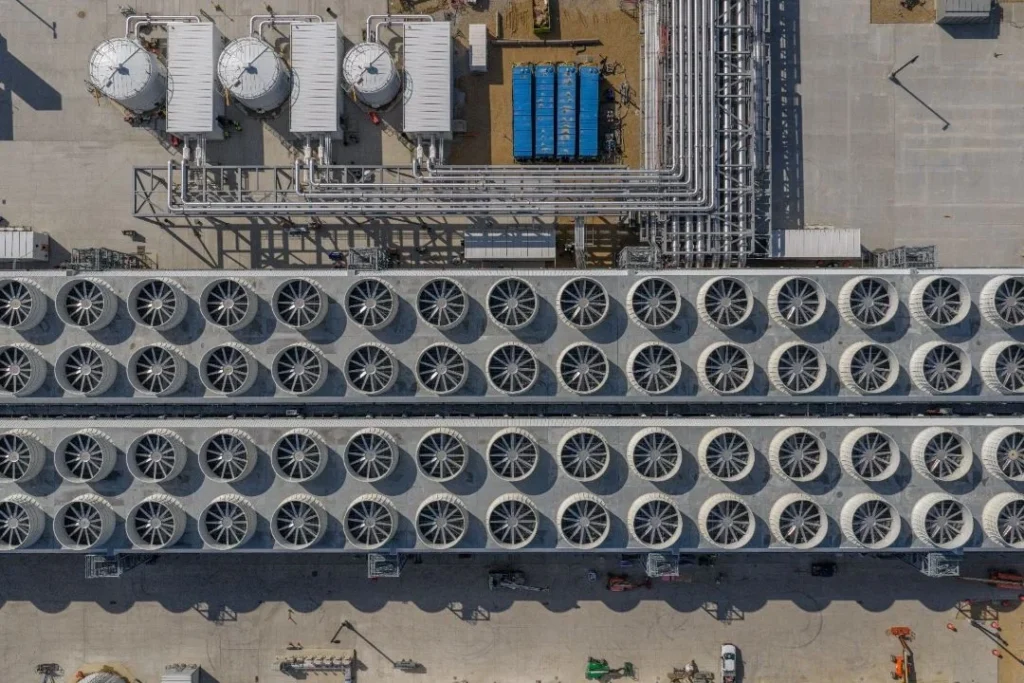

- Closed-Loop Cooling: Air cooling can’t handle this density. Fairwater uses one of the world’s largest liquid chiller plants, operating in a closed-loop system with zero operational water waste. The water is filled once during construction and then continually reused. Over 90% of Microsoft’s AI capacity now runs on this system, drastically cutting environmental impact.

Why These Datacenters Are Different

Microsoft is branding these as AI factories, in contrast to general-purpose cloud datacenters. The distinction matters:

- Traditional Cloud: Optimized for hosting many small, independent tasks.

- AI Datacenter: Functions as one giant machine, designed to train and run foundation models with trillions of parameters.

The architecture blurs the line between cloud computing and dedicated supercomputers. For customers, this means Azure offers frontier-scale compute without the need to build or manage custom HPC systems.

The AI WAN: A Global Supercomputer

Perhaps the most transformative element is Microsoft’s AI WAN, a Wide Area Network designed to interconnect AI datacenters into one global compute fabric.

Instead of being limited by the walls of a single building, workloads can be distributed across multiple Azure regions, all functioning as part of the same global-scale supercomputer. This design offers:

- Resiliency: Workloads can fail over to other sites seamlessly.

- Scalability: GPUs in multiple regions can work together to train a single model.

- Flexibility: Enterprises can tap into resources globally, not just locally.
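
Training one model across regions generally relies on data parallelism: every GPU computes gradients on its own slice of data, and the results are averaged before each update. The sketch below shows a well-known way to keep cross-site traffic small. It averages within each site over the fast local fabric first, then exchanges only one averaged vector per site over the wide-area link. This is a generic hierarchical-reduction pattern offered as an assumption for illustration; the function names are hypothetical, and the announcement does not say the AI WAN works this way.

```python
from typing import List, Sequence

Vector = List[float]


def elementwise_mean(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors (e.g. per-GPU gradients)."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def hierarchical_average(sites: Sequence[Sequence[Vector]]) -> Vector:
    """Two-level average: reduce inside each site, then across sites.

    Only one averaged vector per site would need to cross the WAN. Site
    means are weighted by GPU count so the result equals the flat average.
    """
    site_means = [elementwise_mean(site) for site in sites]  # stage 1: local fabric
    site_sizes = [len(site) for site in sites]
    total = sum(site_sizes)
    dim = len(site_means[0])
    return [                                                 # stage 2: across sites
        sum(size * mean[i] for size, mean in zip(site_sizes, site_means)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Hypothetical example: site A has two GPUs, site B has one.
    site_a = [[1.0, 2.0], [3.0, 4.0]]
    site_b = [[5.0, 6.0]]
    print(hierarchical_average([site_a, site_b]))  # [3.0, 4.0]
    print(elementwise_mean(site_a + site_b))       # [3.0, 4.0], the flat average
```

Weighting each site’s mean by its GPU count is what makes the two-level result match the flat average when sites contribute different numbers of GPUs.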

In effect, Microsoft is positioning Azure as the first distributed AI supercomputer spanning continents.

Microsoft’s Strategic Play

Beyond the datacenter specs, this announcement is about positioning. Microsoft is making three clear statements to the market:

- Azure is for frontier AI: By emphasizing training-scale performance, Microsoft is differentiating Azure from rivals that focus primarily on cloud hosting or edge deployments.

- Partnership with NVIDIA is central: Microsoft is betting heavily on NVIDIA’s roadmap (GB200, GB300) and co-engineering infrastructure to maximize it. This may lock customers deeper into the NVIDIA-Microsoft ecosystem.

- Sustainability matters: With AI under scrutiny for power and water usage, Microsoft is leaning hard on closed-loop cooling and renewable energy sourcing in Europe.

Competitive Landscape

- Google Cloud: Competing with its own TPU v5p clusters and a focus on integration with Gemini. But Google has not framed its datacenters as a single global AI supercomputer the way Microsoft is doing here.

- Amazon Web Services (AWS): Betting on custom Trainium and Inferentia chips. While AWS emphasizes cost optimization, it hasn’t showcased a single AI supercomputer network at this scale.

- Oracle & Others: Oracle Cloud has niche traction in HPC, but cannot match Microsoft’s tens of billions in investment and global reach.

Microsoft’s claim of operating the most powerful AI supercomputer in the world isn’t just a technical boast — it’s a competitive signal to enterprises and governments deciding where to train their next-generation models.

Why It Matters for Enterprises

For businesses and institutions in the U.S., UK, Canada, and beyond, this announcement carries practical implications:

- Lower Barriers to AI R&D: Universities, startups, and enterprises can access cutting-edge compute via Azure without building their own HPC labs.

- Compliance & Locality: With datacenters in Europe, Microsoft can help customers meet regional data-residency and regulatory requirements while still connecting to a global network.

- Performance Gains: Faster training times mean models can move from research to deployment more quickly, reducing time-to-market for AI-driven products.

With Fairwater in Wisconsin and global expansion underway, Microsoft has redrawn the boundaries of cloud computing. Its AI factory network is no longer just about providing capacity; it’s about reshaping the definition of a supercomputer itself — distributed, liquid-cooled, NVIDIA-powered, and accessible through the Azure cloud.

Whether this model proves sustainable at scale — in terms of energy, cost, and diversity of hardware — remains an open question. But one thing is clear: Microsoft is making the case that the future of AI won’t run on isolated clusters.

It will run on a global network of AI supercomputers, and Azure wants to be the platform powering it.